It looks like the next generation of scenery tools (MeshTool 2.0, WED 1.1, and the latest distribution of “the tools”) may have higher system requirements than their predecessors for Mac users. Those requirements would be: an Intel CPU and OS X 10.5.0 or higher.

The problem at the heart of all of these changes is that the tools use CGAL (a geometry library) and the compilers Apple distributes that are compatible with 10.4 and PPC don’t work with CGAL. So I quite literally cannot compile the latest tools because of the features they offer.

I don’t know how much this affects actual authors, and I don’t know if it is possible (given an infinite amount of self-torture) to work around some of the compiler issues. At this point my plan is:

- Distribute the next-gen tools in binary form for 10.5 and x86.

- Leave links to the old tools for users who need binary PPC tools.

- Continue to make all source code available via the Git repo.

If someone finds a way to compile these tools for older targets using the source code, I am perfectly happy to provide distribution of those binary tools or incorporate the fixes if they are manageable.

I’m hoping to have some tools posted “real soon”…

After about a week of on and off hacking, I have finally knocked down one of the major stumbling blocks to getting WED 1.1 up to beta quality: exporting UV-mapped (texture mapped) bezier polygons that cross DSF borders. It works! Well, sort of.

If you have tried to program polygon cutting algorithms, you can appreciate the difficulty of an algorithm that:

- Clips polygons robustly (including holes and other weird topology) and

- Maintains a UV mapping while doing this and

- Works with bezier curves and not just line segments.

WED now does all three! This was the ugliest and hardest part of the DSF exporter, and a big missing piece from going beta.
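To give a feel for the second requirement (keeping the UV mapping intact while clipping), here is a minimal sketch. It is not WED's exporter code: it is one Sutherland-Hodgman pass against a single vertical cut line, it only handles straight line segments (no holes, no bezier curves), and the name `clip_to_max_x` and the `(x, y, u, v)` vertex layout are assumptions made for illustration.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float, float]  # (x, y, u, v), hypothetical layout


def clip_to_max_x(poly: List[Vertex], x_max: float) -> List[Vertex]:
    """Keep the part of a polygon with x <= x_max.

    Wherever an edge crosses the cut line, a new vertex is created and its
    UV is linearly interpolated along the edge, so the texture does not
    slide when the polygon is cut.
    """
    out: List[Vertex] = []
    for i, cur in enumerate(poly):
        prev = poly[i - 1]  # index -1 wraps around to close the ring
        cur_in = cur[0] <= x_max
        prev_in = prev[0] <= x_max
        if cur_in != prev_in:
            # The edge crosses the cut line: find where, then interpolate
            # position and UV with the same parameter t.
            t = (x_max - prev[0]) / (cur[0] - prev[0])
            out.append(tuple(p + t * (c - p) for p, c in zip(prev, cur)))
        if cur_in:
            out.append(cur)
    return out


# A 2x2 square whose texture spans 0..1, cut in half at x = 1.
square = [(0, 0, 0, 0), (2, 0, 1, 0), (2, 2, 1, 1), (0, 2, 0, 1)]
left_half = clip_to_max_x(square, 1.0)
```

The new vertices on the cut line get u = 0.5, which is what keeps the texture lined up across the border. Doing the same for curves and for polygons with holes is where the real difficulty lives.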

Of course, there is one problem: X-Plane can’t read the bezier curves.

The problem is a simple defect in how X-Plane manages DSFs.

- A valid bezier polygon, fully inside the DSF tile, may have control handles that go outside the tile.

- X-Plane can’t handle any DSF coordinates outside the tile.

Doh!

I am not sure what I will do about this, but in the short term, I fear X-Plane will remain limited. Probably the best short term option is to have WED at least flag such problematic bezier polygons; it is possible to approximate them or edit them to make the export work.
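A check like that could look roughly like the sketch below. This is not WED code: the `BezierVertex` type, the `handles_outside_tile` function and the tile bounds passed in are all hypothetical, and it assumes coordinates are plain longitude/latitude degrees.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (longitude, latitude) in degrees


@dataclass
class BezierVertex:
    """Hypothetical bezier vertex: an on-curve point plus two control handles."""
    point: Point
    handle_in: Point
    handle_out: Point


def handles_outside_tile(vertices: List[BezierVertex],
                         west: float, south: float,
                         east: float, north: float) -> List[int]:
    """Return the indices of vertices with a control handle outside the tile."""
    def inside(p: Point) -> bool:
        return west <= p[0] <= east and south <= p[1] <= north

    return [i for i, v in enumerate(vertices)
            if not (inside(v.handle_in) and inside(v.handle_out))]


# The second vertex sits inside the tile, but its outgoing handle pokes
# past the east edge, so it gets flagged.
polygon = [
    BezierVertex((-70.5, 42.5), (-70.6, 42.5), (-70.4, 42.5)),
    BezierVertex((-70.1, 42.5), (-70.3, 42.5), (-69.9, 42.5)),
]
flagged = handles_outside_tile(polygon, west=-71.0, south=42.0, east=-70.0, north=43.0)
```

An author could then approximate or edit only the flagged vertices instead of hunting for them by eye.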

There is still a little bit more exporter code to write, including the line segment exporter (which is separate from the polygon exporter), but with luck the whole DSF export path should be cleaned up in the next few days.

Meshes in X-Plane, whether modeled in an OBJ, or generated as the results of other “3-d clutter” (road .net files, .for forest files, etc.) can be either one or two sided. So first: two-sided geometry is bad in most cases.

In order to understand why two sided geometry is bad, we must consider the alternative. The alternative to two-sided geometry is to simply create each triangle twice, with one facing in each direction. We can do this in an OBJ without making new vertices – because vertices are referenced by indices, we only need more indices, and indices are cheap.

Thus we have an alternative to two sided geometry, namely “doubled” one-sided geometry.
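As a rough illustration of "doubling" (a sketch, not code from any X-Plane tool; `double_sided_indices` is a made-up name), the second copy of each triangle reuses the same three vertex indices in reversed order, which reverses the winding and so makes the copy face the other way. This sketch only deals with indices and winding; it says nothing about the normals stored in the vertices.

```python
from typing import List, Sequence


def double_sided_indices(indices: Sequence[int]) -> List[int]:
    """Return an index list that draws every triangle twice, once per facing.

    No vertices are added: each extra triangle reuses the same three
    indices with two of them swapped, which reverses the winding order.
    """
    if len(indices) % 3 != 0:
        raise ValueError("index list must describe whole triangles")
    doubled = list(indices)
    for i in range(0, len(indices), 3):
        a, b, c = indices[i:i + 3]
        doubled.extend((a, c, b))  # same triangle, opposite facing
    return doubled


# A quad made of two triangles sharing four vertices.
quad = [0, 1, 2, 0, 2, 3]
both_sides = double_sided_indices(quad)
```

The quad still has only four vertices; only the index list grows from 6 to 12 entries.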

The first problem with two sided geometry is performance: for a small number of triangles, it is much faster to simply emit additional indices than to pay the CPU cost of switching the drawing mode to two-sided drawing.

Thus in an object it is virtually never a good idea to use two-sided geometry. That ATTRibute will always be worse.

What about for the other clutter? Forests are currently always two-sided, but that’s okay; X-Plane enables two sided drawing just once, then draws a huge number of trees. Same with roads. For facades, there is a cost to using two sided geometry, so only use it for facades that must be two sided, like fences; do not use it for buildings.

Now the second problem with two sided geometry is lighting: X-Plane does not calculate lighting values separately for the two sides of the two-sided geometry. So if you have directionally lit models with two sided geometry, the lighting will look wrong. This is the second reason to use doubled geometry instead.

Things Are Starting To Look Up

There is a work-around to this problem of incorrect lighting on two-sided geometry: “up normals”. With up normals, the normal vector for the triangle (which is used to determine how light “bounces” off the triangle) is set to face straight up. The result is a triangle with brightest lighting at high noon, regardless of which way the triangle actually faces.

The good: the triangle looks the same on both sides and has sort of a “flat” lighting – it doesn’t look wrong when the sun is setting. The bad: the triangle has “flat” lighting – it looks non-3d.
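The effect is easy to see with a simple diffuse term. The sketch below is not X-Plane's lighting code: it assumes plain Lambert shading (brightness is the dot product of the normal and the direction to the sun, clamped at zero), a Y-up coordinate system, and hypothetical helper names.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

UP: Vec3 = (0.0, 1.0, 0.0)  # assumption: Y is "up" in this sketch


def diffuse(normal: Vec3, to_sun: Vec3) -> float:
    """Lambert diffuse term: clamped dot product of two unit vectors."""
    return max(0.0, sum(n * s for n, s in zip(normal, to_sun)))


def sun_direction(elevation_deg: float) -> Vec3:
    """Unit vector toward a sun sitting in the +X direction at this elevation."""
    e = math.radians(elevation_deg)
    return (math.cos(e), math.sin(e), 0.0)


noon = sun_direction(90.0)
sunset = sun_direction(10.0)

# A vertical quad with real normals: one side faces the sun, the other does not.
facing_sun = diffuse((1.0, 0.0, 0.0), sunset)    # bright
facing_away = diffuse((-1.0, 0.0, 0.0), sunset)  # black

# The same quad with an up normal: both sides get one value that depends
# only on how high the sun is.
up_at_sunset = diffuse(UP, sunset)
up_at_noon = diffuse(UP, noon)
```

With real normals the two sides of the quad differ wildly at sunset; with the up normal they match, at the cost of the quad no longer reacting to which way it faces.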

We use up normals for forests because the forests are made of two-quad trees…the trees look less fake if directional lighting hints don’t make the two quads as obvious. You can simulate this in ac3d using the “make up normal” command for vegetation quads you put in your own models.

For roads, the geometry is two-sided, so we use up normals to avoid having the back of a road element look funny. Some day we may do something more sophisticated.

Fixing Facade Lighting

Facade lighting behavior will be changed in the next 950 release candidate. Before 950, facades would receive up normals, always. Starting in 950, facades will get correct normals if they are one sided and up normals if they are two-sided. This avoids artifacts with two-sided facades, and will make one-sided closed buildings look much better.

…and it always will be!

Seriously, first, let’s be clear: my opinions do not matter! X-Plane is a small program in a large market (game/graphics hardware) and as I’ve said before, flight simulators are not the early adopters of new tech. So (and this is a huge relief to me) I can do my job without correctly predicting the future of computer graphics.

Keep that in mind as I mouth off regarding ray tracing – I’m just some guy throwing tomatoes from the balcony. X-Plane doesn’t have skin in the game, and if I prove to be totally wrong, we’ll write a ray tracer when the tech scales to be flight simulator ready, and you can point to this post and have a good laugh.

With that in mind, I don’t see ray tracing as being particularly interesting for games. I could make arguments that rasterization* is significantly more efficient, and will keep moving the bar each time ray tracing catches up. I could argue that “tricks” like environment mapping, shadow mapping, deferred rendering, and SSAO have continued to move effects into the rasterization space that we would have thought to be ray-tracing-only. (Heck, ray tracing doesn’t even do ambient occlusion particularly well unless you are willing to burn truly insane amounts of computing power.) I could argue that there is a networking effect: GPU vendors make rasterization faster because games use it, and games use it because the GPU makers have made it fast. That’s a hard cycle to break with a totally different technology.

I don’t really have the stature in the world of computer graphics to say such things. Fortunately John Carmack does. Read what he has to say. I think he’s spot on in pointing out that rasterization has fundamental efficiencies over ray tracing, and ray tracing doesn’t offer enough real usefulness to overcome the efficiency gap and the established media pipe-line.

The interview is from 2008; a few months ago Intel announced that first-generation Larrabee hardware wouldn’t be video cards at all. For all effective purposes from a game/flight simulator perspective, they basically never shipped. So as you read Carmack’s comments re: Intel, you can have a good chuckle that Intel’s claims have proven hollow due to the lack of actual hardware to run on.

I will be happy to be proven wrong by ray tracing, or any other awesome new technology. But I am by disposition skeptical until I see it running “for real”, e.g. in a real game that competes with modern games written via rasterization. Recoding old games or showing tech demos doesn’t convince me, because you can recode an old game even if your throughput is 1/20th of rasterization, and you can hide a lot of sins in a tech demo.

Heck, while I’m putting my foot in my mouth, here’s another one: unlimited detail. Any time someone announces the death of the triangle, I become skeptical. And their claim of processing “unlimited point cloud data in real time” strikes me as an over-simplification. Perhaps they can create a smooth level of detail experience with excellent paging characteristics (which is great!) but the detail isn’t unlimited. The data is limited by your input data source, your production system, the limits of your hardware, etc. Those are the same limits that a mesh LOD system has now. In other words, what they are doing may be significantly more efficient, but they haven’t made the impossible possible.

That is my general complaint with most of the “anti-rasterization” claims – they assume that mesh/rasterization systems are coded by stupid people – and yet most of the interesting algorithms for rasterization, like shadow mapping and SSAO, are quite clever. Consider these images: saying that rasterization doesn’t produce nice images while showing Half Life 2 (2004, for the X-Box 360) is like saying that cars are not fuel efficient because a 1963 Cadillac got 8 mpg. The infinite detail sample images show a lot of repeated geometry, something that renderers today already do very well, if that’s what was desirable (which it isn’t).

Finally, Carmack is in favor of sparse voxel octrees (SVOs). SVOs strike me as the most probable of the various non-mesh-rasterization ideas floating around, and an idea that might be useful for flight simulators in some cases. To me what makes SVOs practical (and in defense of the unlimited detail folks, their algorithm potentially does this too) is that they can be mixed and matched with existing rasterization technology, so that you only pay for the new tech where it does you some good.

* Rasterization is the process of drawing on the screen by filling in the pixels covered by a triangle with some shading.
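For the curious, here is roughly what "filling in the pixels covered by a triangle" means, as a toy sketch. It is not how a GPU or X-Plane actually does it: it tests every pixel center on a tiny grid against the triangle's three edges, and the function names are made up for this example.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def edge(a: Point, b: Point, p: Point) -> float:
    """Signed area test: which side of the line a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(tri: Tuple[Point, Point, Point],
              width: int, height: int) -> List[Tuple[int, int]]:
    """Return the (x, y) pixels whose centers fall inside the triangle."""
    a, b, c = tri
    area = edge(a, b, c)
    covered: List[Tuple[int, int]] = []
    if area == 0:
        return covered  # degenerate triangle covers nothing
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            weights = (edge(b, c, p), edge(c, a, p), edge(a, b, p))
            # Inside means p is on the same side of all three edges.
            if all(w * area >= 0 for w in weights):
                covered.append((x, y))
    return covered


pixels = rasterize(((0.0, 0.0), (4.0, 0.0), (0.0, 4.0)), width=4, height=4)
```

A real rasterizer would then run "some shading" for each covered pixel; here we just collect the pixel coordinates.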

I’ll take a break from iPad drivel for a few posts; at least one or two of you don’t already own one. (Seriously, it’s simply easier to blog about X-Plane for iPad because it is already released; a lot of cool things for the desktop are still in development.)

In response to my comments on water reflections in X-Plane 950, some users brought up ray tracing.

My immediate thought is: I will start to think more seriously about ray tracing once it becomes the main technology behind first person shooters (FPS).

Improvements in rendering technology come to FPS before flight simulators (and this is true for the combat sims and MSFS series too, not just us). Global shadows, deferred rendering, screen-space ambient occlusion…the cool new tricks get tried out on FPS; by the time they make it into a flight simulator the technology has moved from “clever idea” to “standard issue.”

Consider that X-Plane now finally has per-pixel lighting. Why didn’t we have it when the FPS first did? Well, one reason is that the FPS were cheating. If you look at the papers suggesting how to program per-pixel lighting, at the time there were all sorts of clever techniques involving baking specular reflections into cube maps and other such work-arounds to improve performance. These were necessary because titles at the time were doing per-pixel lighting on hardware that could barely handle it. X-Plane’s approach (as well as other modern games) is to simply program per pixel lighting and trust that your GeForce 8800 or Radeon 4870 has plenty of shader power.

I believe that the gap between FPS and flight simulators comes from two sources:

- Viewpoint. You can put the camera quite literally anywhere in a flight simulator, and thus the world needs to look good from virtually any position. By comparison, if your game involves a six foot player walking on the ground (and sometimes jumping 10 feet in the air) you know a lot about what the user will never see, and you can pull a lot of tricks to reduce the performance cost of your world based on this knowledge. (This kind of optimization applies to racing games too.)

To give one simple example of the kind of optimization a shooter can make that a flight simulator cannot, consider “portal culling”. A portal-culled world is one where the visibility of distinct regions has been precomputed. A trivial example is a house. Each room is only visible through the doors of the other rooms.* Thus when you are walking through a room, virtually no other room is being drawn at all. The entire world is only 20 by 20 meters. Thus the developers know that they have the entire hardware “budget” of computing power to dedicate to that one room and can load it up with effects, even if they are still expensive.

(A further advantage of portal culling is a balance of effects. Because rooms are not drawn together in arbitrary combinations, the developers may find ways to cheat on the lighting or shadowing effects, and they know nothing will “clutter” the world and ruin the cheats.)**

- Often the FPS will have pre-built content, rather than user-configurable content. Schemes like portal culling (above) only work when you know everything about the world ahead of time and can calculate what is visible where. The same goes for many careful cheats on visual effects.

But a flight simulator is more like a platform: users add content from lots of different sources, and the flight simulator rendering engine has to be able to render an effect correctly no matter what the input. This means the scope of cheating is a lot smaller.

Consider for example water reflections. In a title with pre-made content, the artists can go into the world in advance and mark items as “reflects”, “doesn’t reflect”, reducing the amount of drawing necessary for water reflections. The artist simply has to look around the world and say “ah – this mountain is nowhere near a lake – no one will notice it.”

X-Plane can’t make this optimization. We have no idea where there will be water, or airports, or where you might be flying, or where there might be another multiplayer plane. We know nothing. Everything is subject to change with custom scenery. So we can’t cheat – we have to do a lot of work for reflections, some of which might be wasted. (But it would be too expensive in CPU power to figure out what is wasted while flying.)
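To make the contrast concrete, here is a toy version of the portal culling example from the first point. It is a deliberately simplified sketch, not code from any real engine: it assumes a room can see only itself and the rooms it shares a door with, and the function names and room names are invented.

```python
from typing import Dict, List, Set, Tuple


def precompute_visibility(doors: List[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Build, ahead of time, the set of rooms that can be seen from each room.

    Simplification: a room sees itself plus any room it shares a door with.
    """
    visible: Dict[str, Set[str]] = {}
    for a, b in doors:
        visible.setdefault(a, {a}).add(b)
        visible.setdefault(b, {b}).add(a)
    return visible


def rooms_to_draw(visible: Dict[str, Set[str]], camera_room: str) -> List[str]:
    """At run time, culling is just a table lookup."""
    return sorted(visible.get(camera_room, {camera_room}))


# The house is known in full before the game ships.
house = [("hall", "kitchen"), ("hall", "bedroom"), ("bedroom", "bathroom")]
table = precompute_visibility(house)
from_kitchen = rooms_to_draw(table, "kitchen")
from_hall = rooms_to_draw(table, "hall")
```

The whole trick depends on `house` being fixed when the table is built. If users can add rooms, doors or entire scenery packages later, the table can no longer be trusted, which is the position a flight simulator is in.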

Putting it all together, my commentary on ray-tracing is this: the FPS will be able to integrate small amounts of ray tracing first, because they will be in a position to deploy it tactically, using it only where it is really necessary, in hybrid ray-trace + rasterized engines. They’ll be able to exclude big parts of the scene from the ray tracing pass, improving performance. They’ll be able to “dumb down” the quality of the ray trace in ways that you can’t see, again improving performance. The result of all of this will be some ray tracing in FPS when the hardware is just barely ready.

For a flight simulator, it will take longer, because we’ll need hardware that can do a lot more ray tracing work. We won’t know as much about our world, which comes from third party content, so we won’t be able to eliminate visually unimportant ray traces. Like deferred rendering, shadow mapping, SSAO and a number of other effects, flight simulators will need more computing power to apply the effects to a world that can be modified by users.

(Is ray tracing even useful, compared to rasterization? I have no prediction. Personally I am not excited by it, but fortunately I don’t have to make a good guess as to whether it is the future of flight simulation. The FPS will be able to, by effective cheating, apply ray tracing way before us, and give us a sneak peek into what might be possible.)

* There never are very many windows in those first person shooters, are there?

** To be clear: there is nothing negative about the term “cheat” in computer graphics. A way to cheat on the cost of an algorithm means the developers are very good at their jobs! “Cheating” on the cost of an algorithm means more efficient rendering. If the term cheating seems negative, substitute “lossy optimization”.

So all kidding aside, my iPad arrived today, and it is a pretty cool device. My normal attitude toward new gadgets is “great, more video driver bugs to fix”, but the iPad is exciting.

I’ll blog some other time about why I think the form factor is important and there is a spot for something bigger than a smart phone and smaller than a laptop.

For now I just want to point out that it flies X-Plane; X-Plane for iPad has a bunch of new features, including 2-d panels while you fly, 3-d airports with full taxiway layouts, a completely rebuilt user interface, improved sky and water effects, and even some auto-gen buildings.

Also first impressions of the device itself: it’s really responsive. I have tried to surf the web with my iPod touch (which is based on first-gen iPhone technology) and it’s a tough experience – between the small screen, slow CPU and limited RAM. The iPad surfs the web like a desktop. A very light weight, portable desktop.

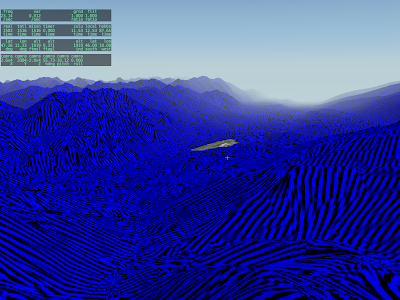

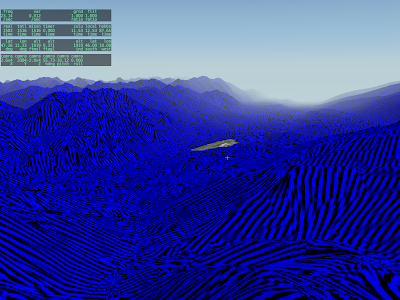

Some of the coolest in-development screen-shots are the bugs. A few weeks ago while working on cloud shaders I managed to create a hybrid of an acid trip and a light-speed trip on Star Wars. Sadly the code has since been modified a thousand times and the effect is gone.

So I’m going to start posting bizarre images as they crop up. Usually they don’t reveal too much about unreleased features.

This came out of some experiments with hills, mountains and UV mapping.

(Clearly what this code needs is a leopard-print texture.)

Normal maps in X-Plane 940 have a funny property: if you flip the normal map horizontally or vertically, the bumps change direction. Things that “stuck out” now “stick in” and vice versa. (If you flip the normal map horizontally and vertically, the two cancel out and the normal map is not reversed.)

You can understand this by thinking of your normal map as a physical piece of metal with bumps punched in it. Flipping it horizontally really means rotating it horizontally to see the other side – now you see the back side of the bumps. Same with the vertical flip. Flip both and you have flipped it twice and it’s front-side forward again.

In a move that is either just in the nick of time or dangerously reckless, I have tweaked the normal map shader for 950 RC1 (coming out “real soon”) to detect and “fix” a flipped normal. In 950 rc1 the bumps in your normal map will always point in the same direction as the normal of your mesh, even if your UV map is flipped horizontally or vertically.
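One way to think about detecting a flip is the "handedness" of the UV mapping. The sketch below is not the actual X-Plane shader: it runs on the CPU, uses made-up function names, and assumes the triangle's vertex order on the mesh stays fixed, so that the sign of the UV-space area alone tells you whether the texture has been mirrored.

```python
from typing import List, Tuple

UV = Tuple[float, float]


def uv_handedness(uv0: UV, uv1: UV, uv2: UV) -> float:
    """Return +1.0 for a normally oriented UV triangle, -1.0 for a mirrored one."""
    du1, dv1 = uv1[0] - uv0[0], uv1[1] - uv0[1]
    du2, dv2 = uv2[0] - uv0[0], uv2[1] - uv0[1]
    det = du1 * dv2 - du2 * dv1  # twice the signed area in UV space
    return 1.0 if det >= 0 else -1.0


def flip_u(uv: UV) -> UV:
    """Mirror a UV coordinate horizontally."""
    return (1.0 - uv[0], uv[1])


def flip_v(uv: UV) -> UV:
    """Mirror a UV coordinate vertically."""
    return (uv[0], 1.0 - uv[1])


tri: List[UV] = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]

normal_sign = uv_handedness(*tri)
mirrored_h = uv_handedness(*[flip_u(uv) for uv in tri])
mirrored_v = uv_handedness(*[flip_v(uv) for uv in tri])
mirrored_both = uv_handedness(*[flip_v(flip_u(uv)) for uv in tri])
```

A single flip changes the sign and a double flip restores it, matching the "piece of metal" picture above. A fix along these lines would multiply the bump direction by that sign so the bumps keep pointing the same way as the mesh normal.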

What does this mean to you, the modeler? It means that you can now mirror your normal map from the left to the right side of the airplane and the normal map will still have the bumps “sticking out” like you want.

I crammed this into 950 RC 1 because it looks like it’s a useful change that will restore flexibility to authors making highly detailed airplanes. Mirroring a symmetric airplane (which results in a horizontally mirrored normal map) is a pretty common thing to do, and if the text is applied as decals, this can be a big win in texture space savings.

I figured best to get the tweak in here now so people could take advantage of it. Plus, what’s an RC1 without an RC2?

It goes without saying that this post was, um, inaccurate.

This, however, is real.

I wasn’t planning on discussing this until April 3rd, but some sites have already picked it up, so I might as well explain the why behind it. Simply put: X-Plane 10 will be for the iPad only. I figure that by letting you know now, you can make an informed decision about whether you want to buy an iPad. (Hint: you do!)

This wasn’t an easy decision to make, but here were some of the deciding factors:

- I spend a lot of my time debugging video drivers, and it makes me very grumpy. You guys seem to think that you can plug anything with copper and wires into your PCIe socket and call it a “video card”. By restricting version 10 to iPad only, we cut down development time by targeting only one GPU.

- We love everything Steve Jobs does and kiss the ground he walks on. Steve says the pad is magical.

- The biggest single feature request we get for X-Plane is “can I use X-Plane while operating a motor vehicle.” X-Plane 9’s requirement of 1 GB of RAM, a modern CPU, etc. means that most X-Plane 9 machines are not particularly portable. By making X-Plane 10 iPad only, we can provide X-Plane in a portable format that’s more compatible with flying a flight simulator while driving.

One fringe benefit to cockpit builders: because the iPad is so light and thin, creation of a realistic home cockpit with X-Plane 10 will be easier than ever. Simply take some super-glue, wipe it on the back of your iPad, and simply “stick” the iPad to whatever part of the cockpit you want. It’s so easy a four-year-old could do it!