As you may have seen on our social media, we have new joystick features coming in the next major update. There are two major features here:

- Custom response curves

- Special (semantic) ranges for certain axis types

The first may be of general interest, while the second is almost exclusively useful to hardware makers and custom cockpit builders.

Custom response curves

For as long as I can remember, X-Plane has had a “control response” setting, which makes your controls respond non-linearly. More of your joystick’s range is mapped to the center of your pitch/roll/yaw axis, and less of the range is devoted to the extremes. This gives you fine-grained control in the region where the controls are typically used, at the expense of coarser control at the limits.

In X-Plane 11, these settings live in the Control Sensitivity window (launched from the bottom of the Settings > Joystick screen), and they will continue to be there in the 11.30 update.

The problem with the existing control response setting, though, is that it applies to all joystick hardware you might plug in. You get just three values—pitch, roll, and yaw—that apply to every axis of that type, no matter the device. Moreover, if you have a different type of axis whose input you want to curve (e.g., throttle, tiller, etc.), you’re simply out of luck.

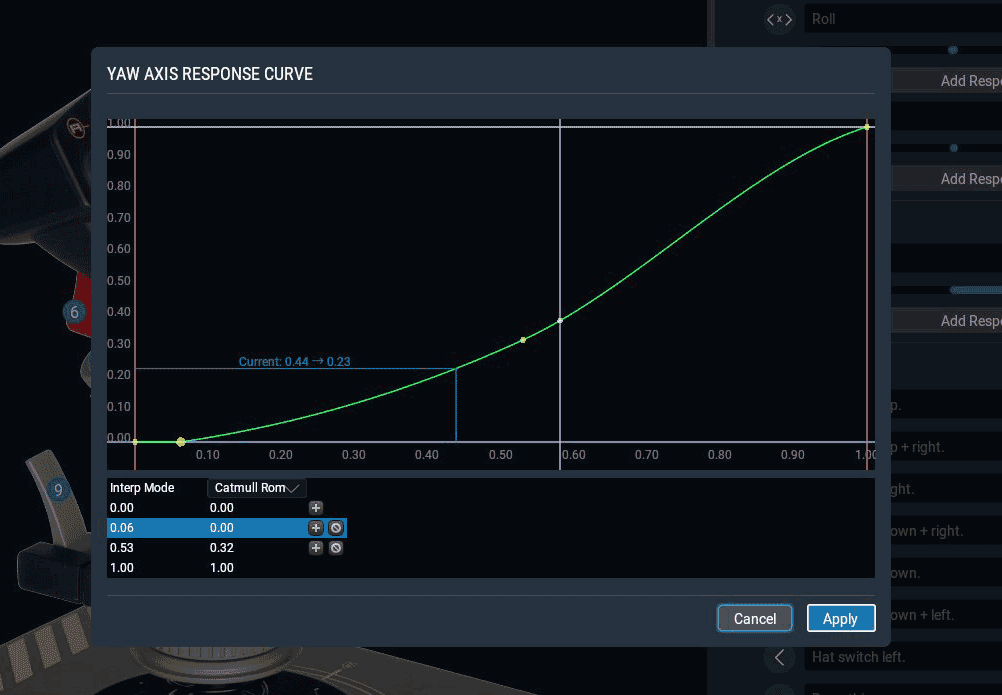

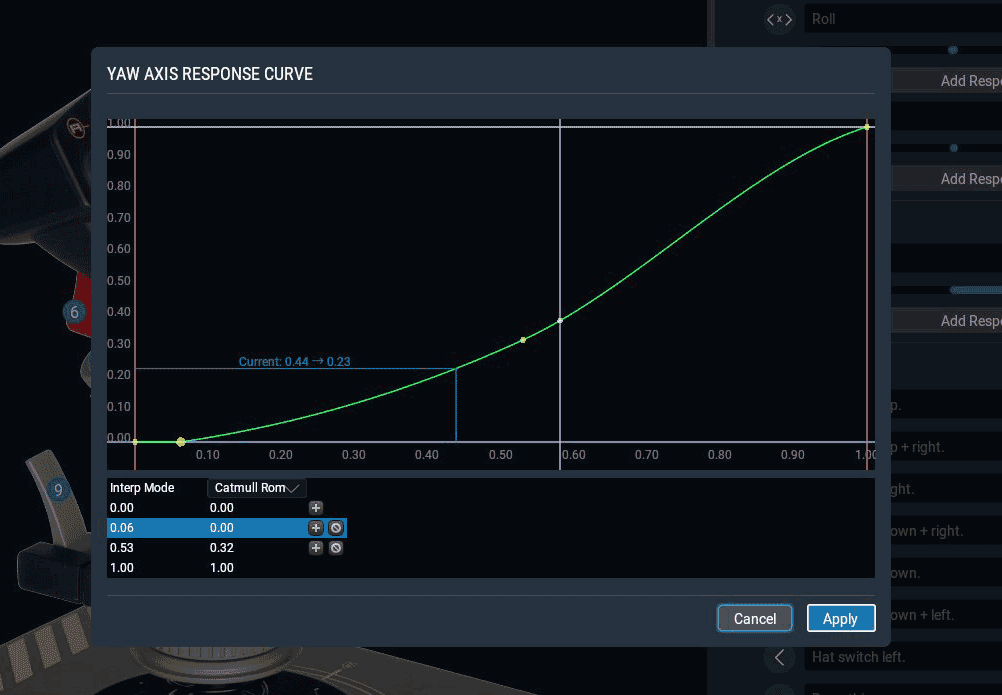

So, in 11.30, we’re adding support for setting custom curves on any axis type. When applied to a pitch, roll, or yaw axis, this will override the global control response curve; applied to other axis types, it will support new functionality not previously available.

These curves are incredibly powerful. They can do things like:

- Manually configure a null zone

- Create a smooth curve (a straightforward replacement for the old “control response” setting)

- Create really complex curves, with loads of control points, and your choice of interpolation method (linear, or one of two methods of smoothing)
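To make the idea concrete, here is a minimal sketch of a response curve with a null zone and linear interpolation between control points. It is not X-Plane’s implementation: the function names, the 5% null zone, and the control points are all assumptions chosen for illustration, and it covers only the linear interpolation case, not the smoothed ones.

```python
from bisect import bisect_right

def apply_null_zone(x, null_zone):
    """Map a raw deflection in [-1, 1] so that |x| <= null_zone reads as 0."""
    if abs(x) <= null_zone:
        return 0.0
    sign = 1.0 if x > 0 else -1.0
    # Rescale the remaining travel so full deflection still reaches +/-1.
    return sign * (abs(x) - null_zone) / (1.0 - null_zone)

def curve_linear(x, points):
    """Piecewise-linear interpolation through sorted (input, output) points."""
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)  # index of the first control point to the right of x
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    t = (x - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)

# Hypothetical control points: half of the stick travel around the center
# maps to only a quarter of the output range, so the center is less twitchy.
POINTS = [(-1.0, -1.0), (-0.5, -0.25), (0.0, 0.0), (0.5, 0.25), (1.0, 1.0)]

def respond(raw, null_zone=0.05):
    """Null zone first, then the custom curve."""
    return curve_linear(apply_null_zone(raw, null_zone), POINTS)
```

With these made-up points, a small deflection inside the null zone produces no output at all, while full deflection still reaches the end of the range.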

But the fun doesn’t stop there!

New semantic ranges

There’s a new component to the curve editor that bears calling out explicitly.

When you’re editing a response curve for certain axis types (throttle, prop, or mixture), you’ll have the option of also configuring the ranges for certain axis-specific behaviors:

- Beta & reverse ranges for throttles

- Feather range for prop controls

- Cutoff range for mixture controls

X-Plane has always set these ranges automatically based on the aircraft model you were flying. For the first time, though, you can configure them yourself to match your hardware.

These are aimed primarily at hardware builders who have physical detents on their controls—you can make X-Plane’s idle point exactly match your throttle’s physical detent, for instance. This makes it possible to build really nice throttle-prop-mixture quadrants that play nicely with X-Plane.
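As a rough illustration of what a semantic range means, the sketch below splits a raw throttle axis into reverse, beta, and forward ranges and reports where the lever sits within its range. The boundaries (0.15 and 0.25), the names, and the 0-to-1 axis convention are assumptions for this example only; in the sim you would pick boundaries that match your own detents, and X-Plane’s internal representation may look quite different.

```python
# Hypothetical ranges for a throttle axis reporting values from 0.0 to 1.0.
# Each entry is (name, start, end); the idle detent sits at 0.25 here.
THROTTLE_RANGES = [
    ("reverse", 0.00, 0.15),
    ("beta", 0.15, 0.25),
    ("forward", 0.25, 1.00),
]

def classify(raw, ranges=THROTTLE_RANGES):
    """Return (range name, position within that range from 0.0 to 1.0)."""
    # Clamp to the overall travel covered by the ranges.
    raw = min(max(raw, ranges[0][1]), ranges[-1][2])
    for name, lo, hi in ranges[:-1]:
        if raw < hi:
            return name, (raw - lo) / (hi - lo)
    # The last range also owns its upper end point.
    name, lo, hi = ranges[-1]
    return name, (raw - lo) / (hi - lo)
```

With these numbers, a lever resting exactly in the physical idle detent (raw value 0.25) reads as the very start of the forward range, which is the kind of match between hardware and sim that the feature is aiming for.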

If you’re a commercial hardware maker, and you’d like X-Plane to correctly configure your hardware by default for your users, you can set up both the axis & button assignments and the semantic axis ranges from the settings UI, then click the “Create Default Configuration File” button. Send the file it creates to me (my email is my first name at X-Plane.com) and I’ll get it shipping in the next release.

Nvidia announced their latest bitcoin graphics cards on August 20th at Gamescom this year. Alongside the usual increase in transistors, they also disappointed all crypto miners by adding a feature that cannot (yet) be used to calculate cryptographic hashes: Ray Tracing! Ray tracing has long been seen as somewhat of a holy grail of graphics rendering, because it’s much closer to replicating the real world than traditional rasterization and shading. However, doing ray tracing in real time has been close to impossible so far. But hey, Nvidia just announced their new RTX GPUs that can do it, so when is X-Plane going to get a fancy ray traced renderer? This and various other questions that have been asked by X-Plane users, as well as some myths, shall be answered! If you have a question that isn’t answered here, feel free to ask it in the comments.

What Nvidia has shown is absolutely impressive. Unfortunately, the fine print of all the marketing hype is that it can’t just be thrown in without engineering effort. The first thing needed is actual RTX hardware, which no one at LR currently has. The second thing needed is a Vulkan-based app; we are getting there, but not in any way that would support RTX. (The whole goal of the Vulkan renderer is to not change the way the world looks, so we’ll first need a shipping production Vulkan renderer.) But then… well, it’s not entirely clear what it takes to actually write a ray traced renderer in all of its details. Nvidia has not yet published the specification for the Vulkan extension (VK_NV_raytracing), but they have published slides from presentations. One thing is very clear: you can’t just copy and paste five lines of Nvidia sample code and suddenly wake up in a ray traced world.

What Nvidia provides is the scaffolding necessary to describe a scene, as well as new types of shaders that allow casting rays from point A to point B and then report back what they hit along the way. This is a huge amount of work that the hardware is providing here, but it’s not the “5 lines and you’ll have ray tracing in your application” that’s being promised. To adopt ray tracing you will have to write the whole ray tracer yourself, from scratch; the hardware just enables you to do so now. This is akin to implementing HDR or PBR: shaders are the base requirement to implement both of these, but once you have shaders you still need to actually implement HDR or PBR on top of them. Another example is building a house and being provided a plot of land that can support it. Sure, it’s great, now you have a place to build your house, but you still have to come up with a blueprint, pick materials to use and then actually build the thing. Implementing ray tracing will take a great amount of engineering effort; nobody is throwing in awesome reflections for free with every purchase of an RTX2080Ti!

The other thing that’s not entirely clear is how well ray tracing will even perform in an environment like X-Plane! Worlds in X-Plane are huge and open, not small scenes from a shooter with tight spacing. Lots of rays are needed, and they have to travel quite far, potentially intersecting with large amounts of geometry. How well do the hardware and API scale up to these sizes? Only time will tell. That’s of course not to diminish Nvidia’s achievement here; it’s an incredible feat of technology in its own right and this is just the first generation!

The other thing worth mentioning is that ray tracing is not just something that Nvidia secretly cooked up in their basement for a decade. This is going to be an industry-wide thing, with APIs that will work across vendors! Historically one vendor has come out with a fancy new way to do things which then became the standard adopted by other vendors. Nvidia has come forward and offered their extension as the base for a core Khronos extension for Vulkan. They have a vested interest in making a cross-vendor, cross-platform API available.

In the foreseeable future, rasterizing renderers are unlikely to go anywhere. Rather, ray tracing for the time being can be used for additional effects that are otherwise hard to achieve. Clearly Nvidia is acknowledging this as well by providing a traditional rasterization engine that by itself is more powerful than previous generation ones. This also means that if X-Plane were to adopt ray tracing tomorrow, you could still run it on your old hardware, you’d just get extra shiny on top if you have ray tracing capable hardware.

Last but not least, this is another reason why you should stay away from the shaders! One day we’ll wake up in the glorious Vulkan future which will open the door to the glorious ray tracing future. All of this means that we’ll have to keep changing our shaders.

We posted the system requirements for X-Plane 11 today. Here are a few notes on them.

64-Bit Only

This should be a surprise to no one: X-Plane 11 will be 64-bit only. Add-ons have already gone 64-bit only, over 90% of our user base is already running 64-bit operating systems, and we need 64-bit to be able to utilize the RAM that we need and everyone already has.

Windows: No More XP or Vista

For Windows, we are dropping XP and Vista support and requiring Windows 7 or newer. XP has been end-of-lifed by Microsoft for a while and is therefore not safe to use (due to a lack of security updates).

OS X: Yosemite and Newer

For OS X, we are dropping a number of OS X versions and requiring Yosemite (10.10) or higher. Apple has increased the tempo for OS releases in the last few years, and they don’t provide new drivers to old operating systems, so we are pre-emptively cutting down the set of supported operating systems to cut down the number of different 3-d drivers we have to test.

Linux: Proprietary Drivers Required

On Linux, we will continue to support only the proprietary 3-d drivers from AMD and NVidia; these drivers use the same OpenGL stack, so they let us support Linux without the cost of additional 3-d driver testing. We don’t officially support the Mesa/Gallium stack for Intel GPUs, but X-Plane Linux users have done a bunch of work to make this unofficially work, and we do our best to not undo their work.

Graphics Cards

We’re setting the minimum graphics card at the AMD HD 5000-series line for the red team and the GeForce 400-series for the green team. This ensures that we only support cards with reasonably current drivers, DX11-class capabilities, etc. For Intel, you’ll need at least an HD2000 series or newer; figuring out your Intel motherboard graphics is really tricky because their numbering scheme is crazy, but if you don’t have at least some kind of “HD” graphics, you definitely can’t run.

We recommend a newer graphics card, e.g. at least from the DX12 or newer generations. When it comes to graphics, basically more is more, so whether you need a Titan or Fury or similarly monstrous card depends on things like how big your monitor is.

CPU

CPU requirements are the messiest part of the spec and the source of most of our internal discussion. Simply put, there really aren’t good ways for us to simply state what CPU is going to work well or not with X-Plane. X-Plane itself has a huge range of CPU uses based on configuration, and CPUs have a huge range of actual performance that can be hard to predict from some of the simple headline numbers. Clock rate is absolutely not indicative of performance, nor is core count.

A recommended system is pretty simple: we recommend the Intel i5 6600K, which is the current top-speed gamer targeted i5. You can go lower or older and lose significant performance, or you can go faster and really start to pay a lot more money. If you want to invest in 8 Xeon cores, it may help… but we aren’t going to go tell you to spend that kind of money for a little more performance.

Practical Recommendations

Here are my practical recommendations for X-Plane 11:

- If your machine is just barely getting by with X-Plane 10 at the lowest settings, and those hardware requirements seem high because your machine was built several years ago, you may need to upgrade for X-Plane 11. In this case, it could be a good time to upgrade OS and multiple components.

- If your machine runs X-Plane 10 well, it will almost certainly run X-Plane 11 in some form, with the exception of the very oldest graphics cards. (If you have one of those, I would say your definition for ‘run well’ is a lot lower than mine is.)

- If you need to purchase new hardware, I strongly recommend running X-Plane 11 on your existing hardware first and examining performance of the demo (when available) to see where you’ll need to upgrade.

Real hardware performance is hugely varied by what you are doing and your particular system components, so trying the demo will tell you more than we can hope to figure out from specs.

We’ve seen a few reports (and Philipp has experienced first hand) that X-Plane will crash on startup with the very latest Catalyst drivers that came out last week. The crash appears to only happen if you have a machine with a discrete AMD GPU (e.g. a 7970M) and a built-in GPU on your CPU for low-power use.

We are investigating this with AMD now; in the meantime please use the previous Catalyst driver if you are seeing crashes.

If you have a desktop machine, don’t have an AMD card, or are on Mac or Linux this does not affect you.

TL;DR: 10.36 works around the latest NVidia driver – let X-Plane auto-update and everything will just work the way it should.

X-Plane 10.36 is out now – it’s a quick patch of X-Plane 10.35 that works around what I believe to be a driver bug* in the new NVidia GeForce 352.86 drivers.

10.36 has been posted using our regular update process and has been pushed to Steam, so if you’re running 10.35, you’ll be prompted to update. The update is very small – about 10-12 MB of download.

With this patch, you can now run the latest NVidia drivers. I have no idea if those drivers are good (I have anecdotal reports that they’re both better and worse than the last drivers, but these kinds of reports often have a large ‘noise’ factor**).

We patched X-Plane because we can cut an X-Plane patch faster than NVidia can re-issue the driver, and the driver issue was causing X-Plane to not start at all for any users, which was turning into a customer support mess. Past NVidia-specific patches have been to fix bugs in X-Plane, but in this case, we’re simply avoiding a pot-hole. I hope that NVidia will get their driver fixed relatively soon so that people installing from DVDs with older versions of the sim won’t be stuck.

Update: NVidia fixed this bug in the new 353.06 drivers!

[OpenGL, Windows 8.1 -x86/x64]: GLSL shader compile error. [1647324]

* The bug is that #defines defined within a function body don’t macro-substitute, but #defines outside a function body do. The work-around is to move some #defines out of function bodies. If anyone can find a reason why #defines can’t be in function bodies, please shout at me, but it’s a pre-processor.

** We’ve had reports of huge fps improvements and losses on beta updates where we’ve made only cosmetic changes to the sim.

I’ll post about X-Plane 10.40 next week – but just a quick note: apparently the Rift will ship Windows-only first:

“Our development for OS X and Linux has been paused in order to focus on delivering a high quality consumer-level VR experience at launch across hardware, software, and content on Windows. We want to get back to development for OS X and Linux but we don’t have a timeline.”

That’s from a much longer blog post describing the Rift’s hardware requirements and the difficulty of moving that many pixels at that high of a framerate with ultra-low latency. (The tl;dr version is that if you have a Windows laptop you probably won’t be able to run the Rift on it.)

I don’t know what the failure mode will be for not meeting requirements: will the Rift simply not run, or will it do the best it can with degraded performance?

To state the obvious, if there isn’t an Oculus Rift SDK and driver for OS X or Linux, then X-Plane can’t support those operating systems.

When it turns out that a bug that we thought was in an OpenGL driver is actually in X-Plane, I try to make a point of blogging it publicly; it’s really, really easy for app developers to blame bugs and weird behavior on the driver writers, who in turn aren’t in a position to respond. The driver writers bust their nuts to develop drivers quickly for the latest hardware that are simultaneously really fast and don’t crash. That is not an easy task, and it’s not fair for us app developers to blame them for our own bugs.

So with that in mind, what did I screw up this time? This is a bug in the framerate test that caused me to mis-diagnose performance of hardware instancing on NVidia drivers on OS X. Thanks to Rob-ART Morgan of Barefeats for catching this – Rob-ART uses the X-Plane framerate test as one of his standard tests of new Macs.

Here’s the TL;DR version: hardware instancing is actually a win on modern NVidia cards on OS X (GeForce 4nn and newer); I will update X-Plane to use hardware instancing on this hardware in our next patch. What follows are the gory (and perhaps tediously boring) details.

What Is Hardware Instancing

Hardware instancing is the ability to tell the graphics card to draw a lot of copies of one object with a single instruction. (We are asking the GPU to draw many “instances” of one object.) Hardware instancing lets X-Plane draw more objects with lower CPU use. X-Plane’s rendering engine will use hardware instancing for simple scenery objects* when available; this is what makes possible the huge amounts of buildings, houses, street signs, and other 3-d detail in X-Plane 10. X-Plane has supported hardware instancing since version 10.0.
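As a toy model of why instancing lowers CPU use, the sketch below plans draw calls for a list of object placements: simple objects that share a mesh are batched into one call, while animated objects get a call each. The `Placement` type and `plan_draw_calls` function are hypothetical names invented for this example, and a real renderer issues GPU commands rather than building Python lists.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Placement:
    mesh: str               # which object model to draw
    position: tuple         # (x, y, z) location in the world
    animated: bool = False  # animated objects are not instanced in this model

def plan_draw_calls(placements):
    """Return a list of (mesh, positions) pairs, one pair per draw call."""
    batches = defaultdict(list)
    calls = []
    for p in placements:
        if p.animated:
            # Not simple enough to instance: one draw call per object.
            calls.append((p.mesh, [p.position]))
        else:
            # Instancing-friendly: collect every copy of the same mesh.
            batches[p.mesh].append(p.position)
    # One draw call per mesh covers all of its instances.
    calls.extend(batches.items())
    return calls

scene = (
    [Placement("house", (i, 0, 0)) for i in range(3)]
    + [Placement("street_sign", (i, 0, 5)) for i in range(2)]
    + [Placement("windsock", (0, 0, 9), animated=True)]
)
calls = plan_draw_calls(scene)
```

In this made-up scene, six placements collapse into three draw calls, and the saving grows with the number of copies of each mesh.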

The Bug

The bug is pretty subtle: when we run the framerate test, we do not set the world level of detail explicitly; instead it gets set by X-Plane’s code to set up default rendering settings for a new machine. This “default code” looks at the machine’s hardware capabilities to pick settings.

The problem is: when you disable hardware instancing (via the command line, explicit code in X-Plane, or by using really old hardware) X-Plane puts your hardware into a lower “bucket” and picks lower world level of detail settings.

Thus when you disable hardware instancing, the framerate test is running on lower settings, and produces higher framerate numbers! This makes it look like turning off instancing is actually an improvement in fps, when actually it’s just doing better at an easier test. On my RetinaBook Pro (650M GPU) I get just over 20 fps with instancing disabled vs 17.5 fps with instancing enabled. But the 20 fps is due to the lower world LOD setting that X-Plane picks. If I correctly set the world LOD to “very high” and disable instancing, I get 16.75 fps. Instancing was actually a win after all.
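The shape of this bug can be sketched in a few lines. The code below is a simplified model, not X-Plane’s code: the function names are invented, and the “high” setting chosen for non-instancing hardware is an assumption (the only value known from the test above is “very high”). The point is that a benchmark which leaves a setting to hardware-dependent defaults ends up changing two variables at once.

```python
def default_world_lod(has_instancing):
    """Stand-in for the 'default settings' code: hardware without
    instancing lands in a lower bucket and gets a lower world LOD."""
    return "very high" if has_instancing else "high"

def framerate_test_settings(has_instancing, world_lod=None):
    """Settings for one benchmark run. Leaving world_lod unset reproduces
    the bug, because the default then depends on the hardware."""
    if world_lod is None:
        world_lod = default_world_lod(has_instancing)
    return {"instancing": has_instancing, "world_lod": world_lod}

def is_fair_comparison(run_a, run_b):
    """Two runs are comparable only if instancing is the sole difference."""
    def others(settings):
        return {k: v for k, v in settings.items() if k != "instancing"}
    return others(run_a) == others(run_b)

# Buggy test: world LOD silently differs between the two runs.
buggy = is_fair_comparison(framerate_test_settings(True),
                           framerate_test_settings(False))

# Fixed test: world LOD is pinned, so only instancing changes.
fixed = is_fair_comparison(framerate_test_settings(True, "very high"),
                           framerate_test_settings(False, "very high"))
```

Here `buggy` comes out false and `fixed` comes out true, which mirrors the fix of setting the world LOD explicitly before comparing framerates.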

Was Instancing Always a Win?

No. The origin of this mess was the GeForce 8800, where instancing was being emulated by Apple’s OpenGL layer. If instancing is going to be software emulated, we might as well not use it; our own work-around when instancing is not available is as fast as Apple’s emulation and has the option to cull off-screen objects, making it even faster. So I wrote some code to detect a GeForce 8800-type GPU and ignore Apple’s OpenGL instancing emulation. Hence the message “Disabling instancing for DX10 NV hw – it is software emulated.”

I believe the limitations of the 8800 are shared with the subsequent 9nnn cards, the 1nn, 2nn and 3nn, ending in the 330M on OS X.

The Fermi cards and later (4nn and later) are fundamentally different and can hardware instance at full power. At the time they first became available (as after-market cards for the Mac Pro) it appeared that there was a significant penalty for instancing with the Fermi cards as well. Part of this was no doubt due to the framerate test bug, but part may also have been a real driver issue. I went back and tried to re-analyze this case (and I revisited my original bug report to Apple), but X-Plane itself has also changed quite a bit since then, so it’s hard to tell how much of what I saw was a real driver problem and how much was the fps test.

Since the 480 first became available on the Mac, NVidia has made significant improvements to their OS X drivers; one thing is clear: as of OS X 10.9.5 instancing is a win and any penalty is an artifact of the fps test.

What About Yosemite?

I don’t know what the effect of instancing is on Yosemite; I wanted to re-examine this bug before I updated my laptop to Yosemite. That will be my next step and will give me a chance to look at a lot of the weird Yosemite behavior users are reporting.

What Do I Need To Do?

You do not need to make any changes on your own machine. If you have an NVidia Mac, you’ll get a small (e.g. < 5%) improvement in fps in the next minor patch when we re-enable instancing.

* In order to draw an object with hardware instancing, it needs to avoid a bunch of object features: no animation, no attributes, etc. Basically the object has to be simple enough to send to the GPU in a single instruction. Our artists specifically worked to make sure that most of the autogen objects were instancing-friendly.

A few weeks ago AMD posted a beta driver for newer Radeon HD cards that fixed some drawing artifacts with X-Plane. They have now released an official, non-beta WHQL driver that works with X-Plane. So if you have a newer AMD card on Windows, I suggest updating to the new Catalyst 14-4 driver.

An update on the state of drivers:

- AMD’s latest Catalyst Beta (Catalyst 14.2 V1.3) fixes the translucency artifacts in HDR mode. This driver also supports the newest cards and has correct brightness levels in HDR mode, so if you’re an AMD user on Windows, this is the driver to use. This change hasn’t made it to the Linux AMD proprietary driver, but it probably will soon.

- I have received reports of faint red lines on the latest NVidia Windows WHQL drivers (334.89) under cloudy conditions, but neither Chris nor I have been able to reproduce them. If you can reproduce this, please file an X-Plane bug. (I have not reported this to NVidia because I can’t reproduce it.)

- Some users have reported crashes with Intel HD 4000 GPUs on Windows; getting the latest drivers from Intel seems to fix the issue. I don’t have good info on what versions work/don’t work but it appears that plenty of machines have shipped with old drivers for their motherboard graphics. I believe X-Plane does run correctly with the Intel HD4000 series GPUs on Windows as long as the right driver is installed.

- OS X 10.9.2 is out, and I think it may have new drivers (the NVidia .kext files changed versions), but I don’t see any change in framerate for either NV or AMD cards.

Update: NVidia has been able to reproduce the red lines bug – we’re still working out the details of what’s going on, but it’s a known issue now. Thanks to everyone who reported it.

If you find a driver bug on Windows or Linux, please report it to us (via our bug reporter) and to NVidia, Intel or AMD. I try to bring known bugs directly to the driver teams, but having the bug in their public bug reports is good too; it makes it clear that real users are seeing real problems with a shipping product.

ppjoy users on Windows have been experiencing a crash on startup; this was a bug in X-Plane 10.10/10.11, induced by particular virtual HID devices that only ppjoy could make. I found the problem and it will be fixed in 10.20.

In the meantime, if you need to use ppjoy and want to work around the problem, set your hat switches to discrete directions, not analog. (X-Plane can’t use an analog hatswitch anyway; most people have this because it is a ppjoy default.)

As a side rant to ppjoy users: I was a bit horrified with the process of installing ppjoy. ppjoy is an unsigned driver so I had to turn off driver signing in Windows. ppjoy is also, as far as I can tell, not hosted anywhere official. So I had to install an unsigned driver off of a file locker onto my Windows machine with the safeties off.

To be clear, I do not think that this is the author’s fault. He is making freeware, and the only thing that would remedy these problems is money. I do not and cannot expect him to give up not only his time (to code) but also pay to solve the distribution problems of official hosting and buying a signing certificate.

Still, the process of taking off all of the safeties to put random third party binary software on my Windows box was unnerving and not something I would ever do as an end-user.

As far as I know, the ppjoy crash and the PS3 controller crash are the only two known regression bugs* with joystick hardware, and they’ll both be fixed in 10.20. (Linux users, needing to edit udev rules to use hardware is not something that we consider to be a bug – see this post.)

When will 10.20 go final? Real soon now. Plugin authors, if you aren’t already running on 10.20 betas, you should have been doing that weeks ago.

* Regression bug means: it used to work in 10.05 and stopped working in 10.10 when we rewrote the joystick code.