I have set up a public bug database for the scenery tools. The reason is that I don’t have much continuous time to work on the scenery tools – it’s very stop and go and will be for a while. The bug base lets you create a permanent record of a problem that I can’t lose track of in the heat of whatever is on fire today.

WED is almost ready for a beta, but I am completely swamped with work right now. For WED 1.0 I worked full time on fixing WED bugs for a significant time when WED went beta; this time around I won’t have that kind of time, at least not this year.

So…we’ll see how the bug base works out. My hope is that I can post WED, put it down, and pick up work on it intermittently with the bug base providing a record of what remains to be done.

Regarding other tools, there will be at least one more MeshTool beta with an improved shapefile processing algorithm that will handle broken shapefiles better. The ac3d plugin has some bugs filed against it but it’s possible that they’d be deferred past the 3.2 plugin.

I have been working on OpenStreetMap data for X-Plane this week. Use of OSM for global scenery is going to be a bit different from projects like X-VFR or other specific custom OSM-based scenery.

The issue at hand is accuracy vs. plausibility…

- Accuracy: how much error is there between what exists in the real world and what exists in the scenery. Is that road in the right location? Is it the right type of road?

- Plausibility: does the scenery as a whole look reasonable? Is that road on land or is it in the water? Is that river running up a mountain?

The global scenery needs to prefer plausibility over accuracy. Because we can’t check and manually fix errors in the source data for the entire world, and because we don’t cut the global scenery very often, it is important that the global scenery err on the side of reduced accuracy (remember, the global scenery isn’t that accurate in the first place) rather than plausibility problems that will clearly be ugly and distracting.

The implication for OSM-based global scenery: not everything in OSM is going to show up in the global scenery. This would be true anyway simply due to the need to keep the global scenery compact. (I trust that OSM will grow to the point where it can source scenery larger than we can ship for the entire world.) But the global scenery generator may need to err on the side of not including data that might have plausibility problems.

Fortunately it is possible to build custom scenery from OSM as well. I don’t see OSM-based global scenery as replacing efforts like X-VFR and others; rather, custom scenery will always be able to use more OSM data, checking the data for accuracy instead of reducing the data to maintain plausibility.

One technical note: I am working on an extension to the road .net file format that would allow road networks to be draped over terrain. This would allow overlay packages to add/replace road grids without having to know the shape of the base scenery mesh, and make it easier to both create custom road networks and to create the tools that manipulate them.

I’ve been working with OpenStreetMap (OSM) data this week. The great thing about OSM (besides already containing a huge amount of road data) is that you can edit and correct data – that is, OSM manages the problem of “crowd sourcing” world map source data.

I get email from people all the time, saying “how can I help fix my local area of the global scenery”. With OSM, you can help, by improving OSM’s source data.

Here are two things that will matter in the quality of generated scenery:

- The oneway tag. Roads that are one-way need to have this tag, or the conversion to X-Plane might have an incorrect two-way road in place. If you don’t see the one-way arrows on the OSM map rendering then this tag might be missing.

- The layer tag. When roads cross, “layer” tells OSM which one is on top (and that they do not intersect). Similarly, if a highway is underground, it’s because it has a negative layer. If the layer tag is missing, complex intersections will probably render as junk.

In the US, a lot of OSM is built off an import of the TIGER census road map. Unfortunately TIGER in its previous released form does not contain one-way or layering information. So particularly for US cities, adding these tags will improve the road rendering a lot!
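As a rough illustration of the two tags above, here is a minimal sketch of a check you could run over a list of OSM ways to find candidates for review. The dictionary layout, the `LIKELY_ONEWAY` set, and the rule "a bridge or tunnel should carry a layer tag" are assumptions made for this example; they are not how the X-Plane converter works.

```python
# Hypothetical review heuristic, NOT the X-Plane converter's logic.
# Each way is assumed to be a dict: {"id": int, "tags": {key: value}}.

# Road types that are usually one-way in the real world (an assumption).
LIKELY_ONEWAY = {"motorway", "motorway_link", "trunk_link"}


def find_tag_gaps(ways):
    """Return {way_id: [problem, ...]} for roads worth a second look."""
    gaps = {}
    for way in ways:
        tags = way["tags"]
        kind = tags.get("highway")
        if kind is None:
            continue  # not a road (river, building, ...)
        problems = []
        if kind in LIKELY_ONEWAY and "oneway" not in tags:
            problems.append("missing oneway")
        # Bridges and tunnels cross other features, so stacking order matters.
        crosses = tags.get("bridge") == "yes" or tags.get("tunnel") == "yes"
        if crosses and "layer" not in tags:
            problems.append("missing layer")
        if problems:
            gaps[way["id"]] = problems
    return gaps


sample_ways = [
    {"id": 1, "tags": {"highway": "motorway"}},
    {"id": 2, "tags": {"highway": "residential", "bridge": "yes"}},
    {"id": 3, "tags": {"highway": "motorway", "oneway": "yes",
                       "bridge": "yes", "layer": "1"}},
    {"id": 4, "tags": {"waterway": "river"}},
]
print(find_tag_gaps(sample_ways))
```

A flagged way is only a hint: the fix is still a human looking at the real road and adding the right tag in OSM.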

There is a bug in the newly released 931. When:

- Clouds are set to stratus and

- Pixel shaders are on and

- The airplane has an OBJ that uses ATTR_diffuse_rgb

the attribute is ignored, which typically makes things appear white. The primary example that has been reported to me is the throttle quadrant of the Piper Malibu.

This is simply a bug in the shaders (which fail to apply the diffuse tinting under ambient-only lighting conditions); I have a fix, but I’m not sure how soon it will be released. We will probably do a small bug-fix release with this and one or two other things, but this is yet to be finalized, since Austin is out of the office this week.

I receive bug reports periodically relating to how X-Plane and the scenery tools handle badly formed scenery files. Here are the rules:

- It is a bug in the scenery tools if they create illegal scenery data. They should at least flag the condition that is leading to an illegal file and refuse to proceed.

- It is a bug in the scenery tools if they crash when trying to import illegal scenery data. Crashing can mean data loss, which is bad.

- X-Plane’s behavior with regard to illegal scenery data is undefined! This means it is not a bug for X-Plane to show a certain behavior with bad input data.

- There is no guarantee that X-Plane’s response to illegal scenery data will remain constant over multiple patches, or even multiple executions of the sim.

This third point has some dangerous consequences:

- X-Plane might handle illegal data in a way that an author views as useful; this “useful” side effect might go away in a future version.

- X-Plane might crash in response to illegal data…sometimes.

- There is no guarantee that X-Plane will provide useful diagnostics.

The reason for this scheme is that X-Plane is under pressure to get scenery loaded quickly, so at some point, there is a limit to how much validation it can do.

With that in mind, I do try to validate scenery when a problem is very common and the validation is not very expensive, or when there are serious data quality problems. But as we move to having the tools do more validation, we can have validation where we need it: immediately, for the authors working on the scenery.
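To make the "tools validate, the sim loads fast" split concrete, here is a minimal sketch of an exporter that flags illegal data and refuses to proceed. The two rules (at least three points, coordinates in range), the `IllegalSceneryError` name, and the text output are all invented for illustration; this is not a real DSF writer and not the actual checks in the scenery tools.

```python
class IllegalSceneryError(ValueError):
    """Raised when the tool would otherwise write illegal scenery data."""


def validate_polygon(points):
    """Return a list of human-readable problems; empty means legal."""
    problems = []
    if len(points) < 3:
        problems.append("polygon has fewer than 3 points")
    for lon, lat in points:
        if not (-180.0 <= lon <= 180.0 and -90.0 <= lat <= 90.0):
            problems.append(f"point ({lon}, {lat}) is outside lon/lat range")
    return problems


def export_polygon(points):
    """Validate first, then emit a (hypothetical) text form of the polygon."""
    problems = validate_polygon(points)
    if problems:
        # Flag the condition and refuse to proceed, rather than write junk.
        raise IllegalSceneryError("; ".join(problems))
    return "\n".join(f"{lon:.6f} {lat:.6f}" for lon, lat in points)


print(export_polygon([(-71.0, 42.0), (-70.9, 42.0), (-70.9, 42.1)]))
```

The author gets the diagnostic at export time, when it is cheap to act on, and the sim never has to see the bad file.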

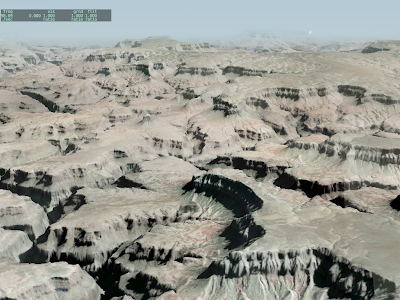

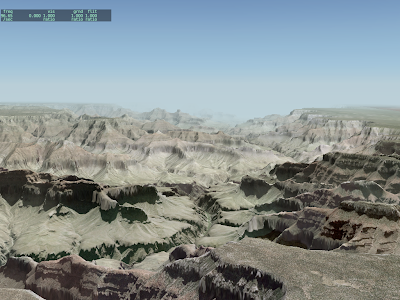

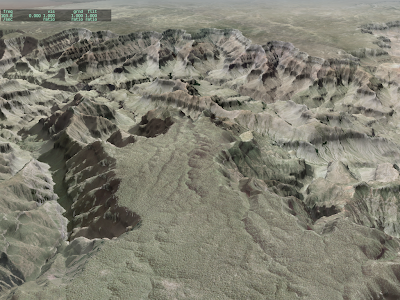

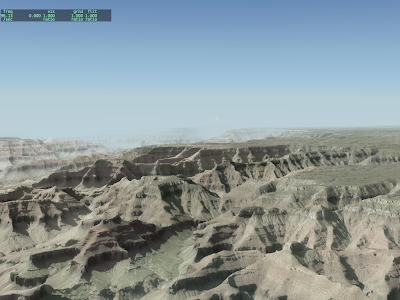

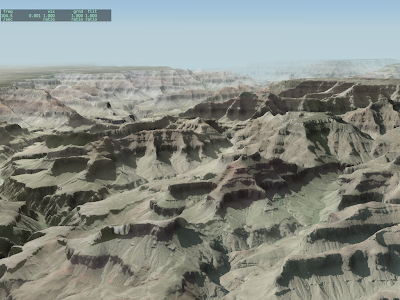

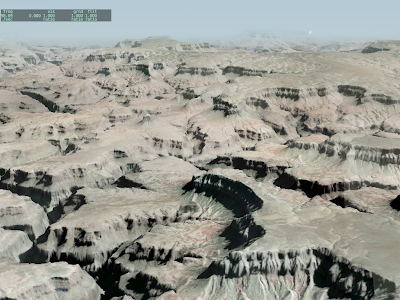

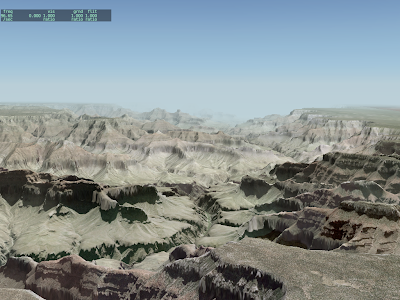

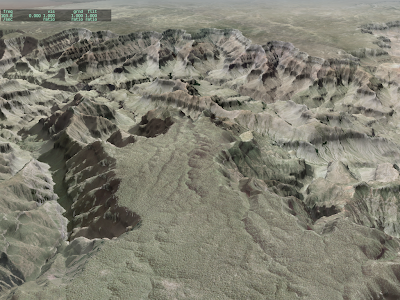

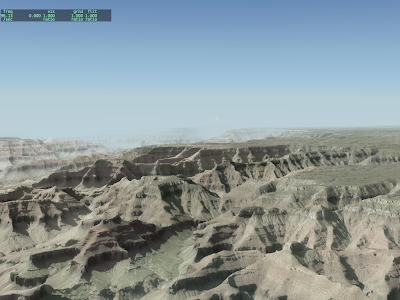

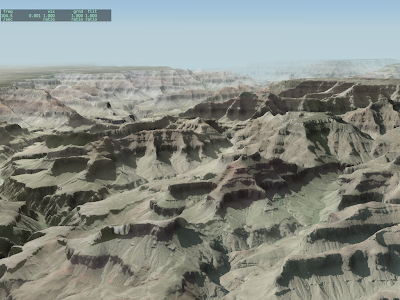

To answer the most basic questions:

- This is a base mesh orthophoto scenery I made with MeshTool, as a test.

- The source DEM is 10m NED, the source imagery is 1mpp DOQQ, down-scaled to 3mpp. I gave MeshTool a point budget of 500,000 mesh points per DSF tile, and it used them all.

- This version of MeshTool (2.0 beta 2) should be out in the next 3 days.

- That’s X-Plane 930RC4. The framerate really is around 100 fps.

- There are no dynamic real-time shadows. Rather, the orthophotos have the shadows “baked” in because they’re photos.

- There are artifacts at the joins of the orthophotos because I spent no time fixing projection errors.

Clearly we need more than 25 nm visibility in some cases!

I have received several requests for a transparent runway with a physical surface type. That request is just strange enough that we need to look back and ask, “how did we get here?”

High Level and Low Level Modeling

The “new” airport system, implemented in X-Plane 850 (with a new apt.dat spec to go with it) is based on a set of lower level drawing primitives, all of which are available via DSF. In other words, if Sergio and I can create an effect to implement the apt.dat spec, you can make this effect directly with your own art assets using a DSF overlay. This relieves pressure on the apt.dat spec to become a kitchen sink of tiny details.

The goal of apt.dat is to make a visually pleasing general rendering of airport data. DSF overlays provide a modeling facility.

Little Tricks

It turns out there are two things the apt.dat file “does” with the rendering engine that you can’t do in an overlay DSF:

- The apt.dat file registers runways in the airport dialog box (for starting flights, positioning the airplane, etc.).

- When the apt.dat reader places OBJs to form approach lights, it can offset their “timing base”, which is why the rabbit flashes in sequence.

(If you were to place a sequence of approach lights with rabbits in an overlay, every single light would flash at the same time because the DSF overlay format does not have a way to adjust the object’s internal timing parameter.)
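The timing-base idea can be sketched in a few lines. This is only a model of the concept: the function names, the 500 ms cycle, and the 50 ms flash are made-up values for illustration, not X-Plane’s real parameters or code.

```python
def is_lit(time_ms, timing_base_ms, cycle_ms=500, flash_ms=50):
    """A light flashes for flash_ms at the start of its own shifted cycle."""
    return (time_ms - timing_base_ms) % cycle_ms < flash_ms


def lit_lights(time_ms, timing_bases_ms):
    """Indices of the lights that are on at time_ms."""
    return [i for i, base in enumerate(timing_bases_ms) if is_lit(time_ms, base)]


count = 5
staggered = [i * 500 // count for i in range(count)]  # 0, 100, 200, 300, 400
identical = [0] * count                               # no per-object offset

for t in (0, 100, 200):
    print(t, lit_lights(t, staggered), lit_lights(t, identical))
```

With staggered bases one light fires at a time and the flash runs down the row; with identical bases every light fires together, which is the overlay behavior described above.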

The solution: the transparent runway. The idea of the transparent runway is to create with the apt.dat file the two aspects of a runway that you can’t build with a DSF overlay: the approach lights and the entry in the global airport dialog box. Transparent runways leave the drawing and surface up to you.

My thinking at the time was that the actual runway visuals and physics would be implemented together via either draped polygons or a hard OBJ.

Orthophotos and Bumps

So why do authors want a transparent but hard runway? The answer is orthophotos. With paged orthophotos, it is now possible to simply put down orthophotos for the entire airport surface area (whether as overlays or a base mesh) at some high resolution (our runways are 10 cm per pixel – I’m not sure if the whole airport area can be done at that resolution) and not have any special overlays for the runways. The transparent + hard runway would change the surface type.

I’m not sure if this is a good idea, but I’m pretty sure that this feature belongs in overlay DSFs and not the apt.dat file.

- Such a technique (varying hard surfaces independent of a larger image) is useful for more than just airports (and certainly more than just runways).

- The technique is unnecessary unless a DSF overlay is in use.

- Unlike nearly all of the rest of the apt.dat file, such an abstraction (invisible but bumpy) is much more a modeling technique and less a description of a real world runway.

I’m not sure we would even want the runway outline to be the source of hard data. If there are significant paved areas outside the runway then a few larger hard surface polygons might be more useful.

The dataref documentation on the X-Plane SDK website is updated for each release of X-Plane 9.

X-Plane 930 has not yet been released. It is a “release candidate” but since we haven’t signed off on it yet, 922r1 is still the most current real release of X-Plane, and it’s what users get when they update without asking for a beta. So the website has 922 datarefs.

Since version 9, every release of X-Plane (including betas and RCs) has a copy of datarefs.txt in the plugins folder that is correct for that release. In other words, the docs that ship with X-Plane and the sim itself are always in sync.

So for now use datarefs.txt in the 930 RC 2 plugins folder! When 930 goes final, the website will be updated.

A few days ago, the ASTER GDEM was released. ASTER GDEM is a new elevation set with even greater coverage than SRTM. Both SRTM and ASTER (I’ll drop the GDEM – in fact ASTER produces more than just elevation data, but the elevation data is what gets flight simmers excited right now) are space-based automated measurements of the earth’s height. But since ASTER is on a satellite (as opposed to an orbiting space shuttle) it can reach latitudes closer to the poles.

So what does this mean for scenery? What does it mean for the global scenery? A few thoughts:

- ASTER data is not yet very easy to get. You can sign up with the USGS distribution website but you’re limited to 100 tiles at a time, with some latency between when you ask and when you get an FTP site. Compare this to SRTM, which can be downloaded automatically in its entirety, or ordered on DVD. ASTER may reach this level of availability, but it’s not there yet.

- ASTER is, well, lumpy. (NASA says “research grade”, but you and I can say “lumpy”.) Jonathan de Ferranti describes ASTER and its limitations in quite some detail. Of particular note is that while the file resolution is 30m, the effective resolution of useful data will be less.

SRTM has its defects, too, but ASTER is very new, so the GIS community hasn’t had a chance to produce “cleaned up” ASTER. And clean-up matters; it only takes one really nice big spike in a flat flood plain to make a “bug” in global scenery. I grabbed the ASTER DEMs for the Grand Canyon. Coverage was quite good, despite the steep terrain angle (steep terrain is problematic by design for SRTM) but there were still drop-out areas that were filled with SRTM3 DEMs, and the filled-in area was noticeable.

- By the numbers, ASTER is not as good as NED; I imagine that other country-specific national elevation datasets are also both more accurate and more precise than ASTER.

- The licensing terms are, well, unclear. The agreements I’ve seen imply a limited set of research uses for the data. The copyright terms are not well specified.

So at this point I think ASTER is a great new resource for custom scenery, where an author can grab an ASTER DEM in a reasonable amount of time, check it carefully, and thus have access to high quality data for remote parts of the Earth, particularly areas where locally grown data is not available or not high quality.

In the long term, ASTER is a huge addition to the set of data available because of its wide-scale coverage of remote areas, and because it can fill holes in SRTM. (ASTER and SRTM suffer from different causes for drop-outs, so it is imaginable that there won’t be a 1:1 correlation in drop-outs.)
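The hole-filling idea is simple to sketch. The example below assumes both DEMs have already been resampled to the same grid and that drop-outs have been converted to `None` on load; `fill_voids` and the sample heights are hypothetical, and a real workflow would also need to blend the seams (a raw fill can be quite noticeable).

```python
def fill_voids(primary, fallback):
    """Copy fallback heights into primary wherever primary has a void.

    Both grids are lists of rows with identical shape; None marks a void.
    Returns (filled_grid, number_of_cells_filled). Inputs are not modified.
    """
    filled = []
    filled_count = 0
    for primary_row, fallback_row in zip(primary, fallback):
        row = []
        for p, f in zip(primary_row, fallback_row):
            if p is None and f is not None:
                row.append(f)
                filled_count += 1
            else:
                row.append(p)  # keep primary data, or the void if both lack data
        filled.append(row)
    return filled, filled_count


srtm = [[100, None], [None, 130]]    # made-up heights in meters
aster = [[101, 112], [None, 131]]
grid, count = fill_voids(srtm, aster)
print(grid, count)
```

Cells that are void in both sources stay void, which is exactly where the two sensors’ drop-outs happen to coincide.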

But in the short term, I don’t think ASTER is a SRTM replacement for global scenery; void-filled SRTM is a mature product, reasonably free of weirdness (and sometimes useful data). ASTER is very new, and exciting, but not ready for use in global scenery.

FSBreak interviewed Austin last week. It’s an interesting listen, and they cover a lot of ground. A few comments on Laminar’s approach to developing software:

That Code Stinks!

Austin is absolutely correct that we (LR) write better software because neither of us is shy about telling the other when a piece of code stinks. But I think Austin deserves the credit for creating this environment. An “ego-free” zone where people can criticize each other honestly and freely is a rare and valuable thing, in many domains, not just music.

When I first came to LR, Austin created this environment by responding positively to feedback, no matter how, um, honest. At that point 100% of the code was written by him and 0% by me. Thus if I was going to say “this piece of code really needs to be different”, it was up to Austin to either run with it or try to defend his previous work.

To his credit, Austin ran with it, 100% of the time. I can’t think of a single time that he didn’t come down on the side of “let’s make X-Plane better”. That set the tone for the environment we have now: one that is data driven, regardless of who the original author is.

I would say this to any programmer who faces a harsh critique of code: good programmers write bad code! I have rewritten the culling code (the code that decides whether an OBJ really needs to be drawn*) perhaps four times now in the last five years. Each time I rewrote the code, it was a big improvement. But that doesn’t make the original code a mistake – the previous iterations were still improvements in their day. Programming is an iterative process. It is possible to write code that is both good and valuable to the company and going to need to be torn out a year later.

A Rewrite Is Not A Compatibility Break

Austin also points to the constant rewriting of X-Plane as a source of performance. This is true too – Austin has a zero tolerance policy toward old crufty code. If we know the code has gotten ugly and we know what we would do to make the code clean, we do that, immediately, without delay. Why would you ever put off fixing old code?

Having worked like this for a while, I am now amazed at the extent to which other organizations (including ones I have worked in) are willing to put off cleaning up code organization problems.

Simply put, software companies make money by changing code, and the cost is how long the changes take. If code is organized to make changing it slower, this fundamentally affects the financial viability of the company! (And the longer the code is left messy, the more difficult it will be to clean up later.)

But I must also point out one critical detail: rewriting the code doesn’t mean breaking compatibility! Consider the OBJ engine, which has been rewritten more times than I care to remember. It still loads OBJ2 files from X-Plane 620.

When we rework a section of the sim, we make sure that the structure of the code exactly matches what we are working on now (rather than what we were working on two years ago) so that new development fits into the existing code well. But it is not necessary to drop pieces of the code to achieve that. I would describe this “refactoring” as straightening a curvy highway so you can drive faster. If the highway went from LA to San Francisco, it still will – just in less time.

In fact, I think the issue of compatibility in X-Plane’s flight model has a lot more to do with whether the goal is to emulate reality or past results. This debate is orthogonal to refactoring code on a regular basis.

* Since OBJs are expensive to draw and there are a huge number of OBJs in a scenery tile, the decision about whether to draw an OBJ is really important to performance. Make bad decisions, you hurt fps. Spend too long debating what to draw, you also hurt fps!
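For readers who have not seen culling code, here is a minimal sketch of the kind of cheap test the footnote describes. It is a generic bounding-sphere check with invented thresholds, not X-Plane’s actual culling algorithm; `should_draw` and its parameters are hypothetical.

```python
import math


def should_draw(camera, center, radius, max_distance, min_angular_size=0.002):
    """Decide cheaply whether an object is worth sending to the GPU.

    camera, center: (x, y, z) positions in meters.
    radius: radius of the object's bounding sphere in meters.
    min_angular_size: smallest radius/distance ratio still worth drawing.
    """
    distance = math.dist(camera, center)
    if distance - radius > max_distance:
        return False  # entirely beyond the visibility limit
    if distance <= radius:
        return True   # camera is inside the bounding sphere
    return radius / distance >= min_angular_size  # too small on screen?


camera = (0.0, 0.0, 0.0)
print(should_draw(camera, (100.0, 0.0, 0.0), 5.0, 1000.0))    # near and large
print(should_draw(camera, (10000.0, 0.0, 0.0), 5.0, 1000.0))  # beyond visibility
print(should_draw(camera, (900.0, 0.0, 0.0), 1.0, 1000.0))    # in range but tiny
```

The test costs one distance computation per object, which reflects the trade-off: a smarter test would reject more objects but spend more time deciding.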